A System API is designed to retrieve data from a backend system that has scalability challenges. What API policy can best safeguard the backend system?

A.

IPwhitelist

B.

SLA-based rate limiting

C.

Auth 2 token enforcement

D.

Client ID enforcement

SLA-based rate limiting

Explanation: Explanation

Correct Answer: SLA-based rate limiting

*****************************************

>> Client Id enforement policy is a "Compliance" related NFR and does not help in

maintaining the "Quality of Service (QoS)". It CANNOT and NOT meant for protecting the

backend systems from scalability challenges.

>> IP Whitelisting and OAuth 2.0 token enforcement are "Security" related NFRs and again

does not help in maintaining the "Quality of Service (QoS)". They CANNOT and are NOT

meant for protecting the backend systems from scalability challenges.

Rate Limiting, Rate Limiting-SLA, Throttling, Spike Control are the policies that are "Quality

of Service (QOS)" related NFRs and are meant to help in protecting the backend systems

from getting overloaded.

https://dzone.com/articles/how-to-secure-apis

Refer to the exhibit.

A developer is building a client application to invoke an API deployed to the STAGING

environment that is governed by a client ID enforcement policy.

What is required to successfully invoke the API?

A.

The client ID and secret for the Anypoint Platform account owning the API in the STAGING environment

B.

The client ID and secret for the Anypoint Platform account's STAGING environment

C.

The client ID and secret obtained from Anypoint Exchange for the API instance in the

STAGING environment

D.

A valid OAuth token obtained from Anypoint Platform and its associated client ID and

secret

The client ID and secret obtained from Anypoint Exchange for the API instance in the

STAGING environment

Explanation: Explanation

Correct Answer: The client ID and secret obtained from Anypoint Exchange for the API

instance in the STAGING environment

*****************************************

>> We CANNOT use the client ID and secret of Anypoint Platform account or any individual

environments for accessing the APIs

>> As the type of policy that is enforced on the API in question is "Client ID Enforcment

Policy", OAuth token based access won't work.

Right way to access the API is to use the client ID and secret obtained from Anypoint

Exchange for the API instance in a particular environment we want to work on.

References:

Managing API instance Contracts on API Manager

https://docs.mulesoft.com/api-manager/1.x/request-access-to-api-task

https://docs.mulesoft.com/exchange/to-request-access

https://docs.mulesoft.com/api-manager/2.x/policy-mule3-client-id-based-policies

An organization wants MuleSoft-hosted runtime plane features (such as HTTP load balancing, zero downtime, and horizontal and vertical scaling) in its Azure environment. What runtime plane minimizes the organization's effort to achieve these features?

A.

Anypoint Runtime Fabric

B.

Anypoint Platform for Pivotal Cloud Foundry

C.

CloudHub

D.

A hybrid combination of customer-hosted and MuleSoft-hosted Mule runtimes

Anypoint Runtime Fabric

Explanation: Explanation

Correct Answer: Anypoint Runtime Fabric

*****************************************

>> When a customer is already having an Azure environment, It is not at all an ideal

approach to go with hybrid model having some Mule Runtimes hosted on Azure and some

on MuleSoft. This is unnecessary and useless.

>> CloudHub is a Mulesoft-hosted Runtime plane and is on AWS. We cannot customize to

point CloudHub to customer's Azure environment.

>> Anypoint Platform for Pivotal Cloud Foundry is specifically for infrastructure provided by

Pivotal Cloud Foundry

>> Anypoint Runtime Fabric is right answer as it is a container service that automates the

deployment and orchestration of Mule applications and API gateways. Runtime Fabric runs

within a customer-managed infrastructure on AWS, Azure, virtual machines (VMs), and

bare-metal servers.

-Some of the capabilities of Anypoint Runtime Fabric include:

-Isolation between applications by running a separate Mule runtime per application.

-Ability to run multiple versions of Mule runtime on the same set of resources.

-Scaling applications across multiple replicas.

-Automated application fail-over.

-Application management with Anypoint Runtime Manager.

Reference: https://docs.mulesoft.com/runtime-fabric/1.7/

An IT Security Compliance Auditor is assessing which nonfunctional requirements (NFRs)

are already being implemented to meet security measures.

A. The API invocations are coming from a known subnet range

B. Username/password supported to validate login credentials

C. Sensitive data is masked to prevent compromising critical information

D. The API is protected against XML invocation attacks

E. Performance expectations are to be allowed up to 1,000 requests per second

A REST API is being designed to implement a Mule application.

What standard interface definition language can be used to define REST APIs?

A.

Web Service Definition Language(WSDL)

B.

OpenAPI Specification (OAS)

C.

YAML

D.

AsyncAPI Specification

OpenAPI Specification (OAS)

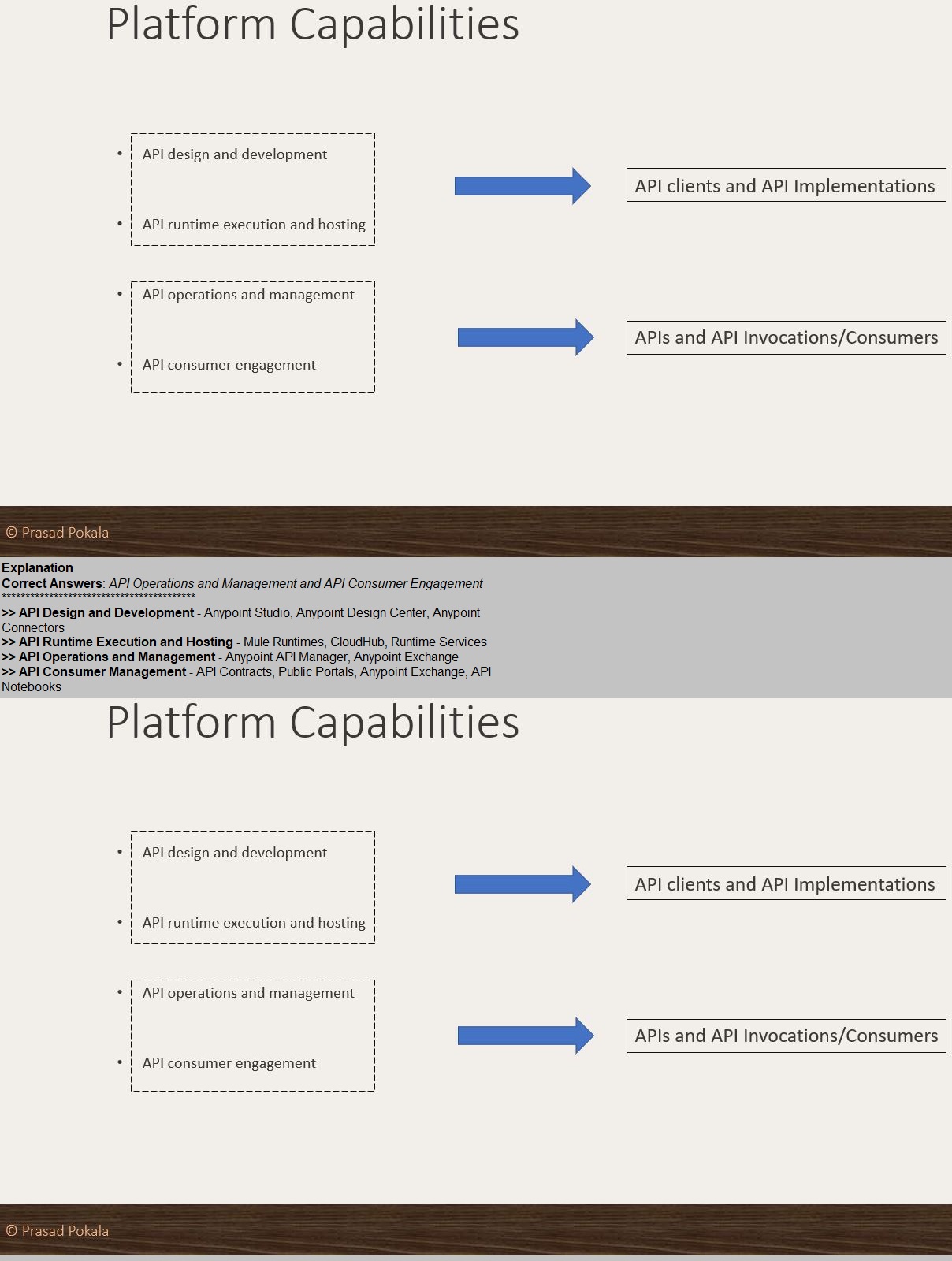

What Anypoint Platform Capabilities listed below fall under APIs and API

Invocations/Consumers category? Select TWO.

A.

API Operations and Management

B.

API Runtime Execution and Hosting

C.

API Consumer Engagement

D.

API Design and Development

API Design and Development

Explanation: Explanation

Correct Answers: API Design and Development and API Runtime Execution and Hosting

*****************************************

>> API Design and Development - Anypoint Studio, Anypoint Design Center, Anypoint

Connectors

>> API Runtime Execution and Hosting - Mule Runtimes, CloudHub, Runtime Services

>> API Operations and Management - Anypoint API Manager, Anypoint Exchange

>> API Consumer Management - API Contracts, Public Portals, Anypoint Exchange, API

Notebooks

An organization has several APIs that accept JSON data over HTTP POST. The APIs are

all publicly available and are associated with several mobile applications and web

applications.

The organization does NOT want to use any authentication or compliance policies for these

APIs, but at the same time, is worried that some bad actor could send payloads that could

somehow compromise the applications or servers running the API implementations.

What out-of-the-box Anypoint Platform policy can address exposure to this threat?

A.

Shut out bad actors by using HTTPS mutual authentication for all API invocations

B.

Apply an IP blacklist policy to all APIs; the blacklist will Include all bad actors

C.

Apply a Header injection and removal policy that detects the malicious data before it is used

D.

Apply a JSON threat protection policy to all APIs to detect potential threat vectors

Apply a JSON threat protection policy to all APIs to detect potential threat vectors

Explanation: Explanation

Correct Answer: Apply a JSON threat protection policy to all APIs to detect potential threat

vectors

*****************************************

>> Usually, if the APIs are designed and developed for specific consumers (known

consumers/customers) then we would IP Whitelist the same to ensure that traffic only

comes from them.

>> However, as this scenario states that the APIs are publicly available and being used by

so many mobile and web applications, it is NOT possible to identify and blacklist all

possible bad actors.

>> So, JSON threat protection policy is the best chance to prevent any bad JSON payloads

from such bad actors.

The asset version 2.0.0 of the Order API is successfully published in Exchange and configured in API Manager with the Autodiscovery API ID correctly linked to the API implementation, A new GET method is added to the existing API specification, and after updates, the asset version of the Order API is 2.0.1. What happens to the Autodiscovery API ID when the new asset version is updated in API Manager?

A. The API ID changes, but no changes are needed to the API implementation for the new asset version in the API Autediscovery global element because the API ID is automatically updated

B. The APL ID changes, so the API implementation must be updated with the latest API ID for the new asset version in the API Autodiscovery global element

C. The APLID does not change, so no changes to the APT implementation are needed for the new asset version in the API Autodiscovery global element

D. The APL ID does not change, but the API implementation must be updated in the AP] Autodiscovery global element to indicate the new asset version 2.0.4

Explanation:

Understanding API Autodiscovery in MuleSoft:

Effect of Asset Version Update on API Autodiscovery:

Evaluating the Options:

| Page 2 out of 19 Pages |

| Mulesoft MCPA-Level-1 Exam Questions Home |